Following Nvidia's (NVDA) incredible quarter and strong guidance raise, we think shares of the AI-chip powerhouse can rise another 14% from their record highs in the next six to nine months. That's typically our time horizon for a Club price target, which we're boosting on Nvidia to $450 per share from $300. We're keeping our 2 rating on the stock, which indicates we would want to wait for a pullback before buying more.

No kidding, right? Nvidia closed Wednesday at $305 per share ahead of the amazing after-the-bell financials that pushed shares up as much as nearly 29% to Thursday's all-time intraday high of $394.80 each. It's almost in the $1 trillion market cap club.

Jim Cramer, a supporter of Nvidia since at least 2017, recently designated it the Club's second own-it-don't-trade-it stock. (Apple was the first.) Jim even renamed his dog Nvidia.

Our new $450-per-share price target on Nvidia is about 45 times full-year fiscal 2025 (or calendar year 2024) earnings estimates. Nvidia has a weird financial calendar and on Wednesday evening reported results for its fiscal 2024 first quarter. While 45 times is not cheap on a valuation basis, at a little over two times the current valuation of the S&P 500, it's only slightly above the 40 times average valuation that investors have placed on the stock over the past five years. In our view, it's more than justified when factoring in the runway for growth that Nvidia has in front of it. That's what we're seeing Thursday, as this latest round of upward estimate revisions also serves as a reminder that Nvidia, more often than not, has proven cheaper (or more valuable) than initially believed because analysts have been consistently overly conservative about the potential of Nvidia's disruptive nature, which is now on full display as the company stands as the undisputed leader in cards to run artificial intelligence.

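For readers who want to check the valuation arithmetic, here is a minimal sketch using only the figures quoted in this article. The variable and function names are illustrative, and the earnings-per-share figure is merely what a $450 target at roughly 45 times earnings implies, not a published estimate.

```python
# Figures quoted in the article; all names here are illustrative.
PRICE_TARGET = 450.00          # new Club price target, USD per share
INTRADAY_HIGH = 394.80         # Thursday's all-time intraday high, USD
TARGET_MULTIPLE = 45           # "about 45 times" fiscal 2025 earnings estimates
FIVE_YEAR_AVG_MULTIPLE = 40    # average multiple over the past five years


def upside(target: float, price: float) -> float:
    """Fractional gain needed to move from price to target."""
    return target / price - 1


def implied_eps(target: float, multiple: float) -> float:
    """Earnings per share implied by a price target and an earnings multiple."""
    return target / multiple


upside_from_high = upside(PRICE_TARGET, INTRADAY_HIGH)
eps_estimate = implied_eps(PRICE_TARGET, TARGET_MULTIPLE)
premium_to_average = TARGET_MULTIPLE / FIVE_YEAR_AVG_MULTIPLE - 1

print(f"Upside from record high: {upside_from_high:.1%}")              # 14.0%
print(f"Implied FY2025 EPS (approx.): ${eps_estimate:.2f}")            # $10.00
print(f"Premium to 5-year average multiple: {premium_to_average:.1%}")  # 12.5%
```

Because the 45 times multiple is stated only approximately, the implied earnings figure of about $10 per share should be read as a rough back-of-the-envelope number.
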
Chart: Nvidia's (NVDA) 5-year performance

Jim has been singing the praises of Nvidia CEO Jensen Huang for years, not to mention covering many of the graphics processing unit (GPU) technologies already in place that enabled the company to capitalize on the explosion of AI into the consumer consciousness when ChatGPT went viral this year.

On the post-earnings call Wednesday evening, management made clear that they see things getting even better later this calendar year. While they don't officially release guidance beyond the current quarter, the team said that demand for generative AI and large language models has extended "our Data Center visibility out a few quarters and we have procured substantially higher supply for the second half of the year." Put simply, management appears to be indicating that earnings in the second half of the year stand to be even greater than in the first half.

The demand they're talking about is broad-based, coming from consumer internet companies, cloud service providers, enterprise customers, and even AI-based start-ups.

Keep in mind, Nvidia's first-ever data-center central processing unit (CPU) is coming out later this year, with management noting that "at this week's International Supercomputing Conference in Germany, the University of Bristol announced a new supercomputer based on the Nvidia Grace CPU Superchip, which is six times more energy-efficient than the previous supercomputer." Energy efficiency is a major selling point. As we saw in 2022, energy represents a significant input cost when operating a data center, so anything that can be done to reduce it is going to be highly attractive to customers looking to increase their own profitability. The Omniverse Cloud is also on track to be available in the second half of the year.

At a higher level, management spoke on the call about the need for the world's data centers to go through a major upgrade cycle in order to handle the computing demands of generative AI applications, such as OpenAI's ChatGPT. (Microsoft, also a Club name, is a major backer of OpenAI and uses the start-up's tech to power its new AI-enhanced Bing search engine.)

"The entire world's data centers are moving towards accelerated computing," Huang said Wednesday evening. That's $1 trillion worth of data center infrastructure that needs to be revamped because it's almost entirely CPU-based, which, as Huang noted, means "it's basically unaccelerated." However, with generative AI clearly becoming a new standard and GPU-based accelerated computing being so much more energy-efficient than unaccelerated CPU-based computing, data center budgets will, as Huang put it, need to shift "very dramatically towards accelerated computing and you're seeing that now."

As noted in our guide to how the semiconductor industry works, the CPU is basically the brains of a computer, responsible for retrieving instructions and inputs, decoding those instructions, and sending them along so that an operation is carried out to deliver the desired result. GPUs, on the other hand, are more specialized and are good at taking on many tasks at once. Whereas a CPU will process data sequentially, a GPU will break down a complex problem into many small tasks and perform them at once.

Huang went on to say that, as we move forward, the capital expenditure budgets coming from data center customers are going to be focused heavily on generative AI and accelerated computing infrastructure.

So, over the next five to 10 years, we stand to see what is now about $1 trillion and growing worth of data center budgets shift very heavily into Nvidia's favor as cloud providers look to the company for accelerated computing solutions. In the end, it's simple really: all roads lead to Nvidia. Any company of note is migrating workloads to the cloud, be it Amazon Web Services (AWS), Microsoft's Azure or Google Cloud, and the cloud providers all rely on Nvidia to support their products.

Why Nvidia? Huang noted on the call that Nvidia's value proposition, at its core, is that it's the lowest total cost of ownership solution. Nvidia excels in several areas that make that so.

It is a full-stack data center solution. It's not just about having the best chips; it's also about engineering and optimizing software solutions that allow users to maximize their use of the hardware. In fact, on the conference call, Huang called out a networking stack called DOCA and an acceleration library called Magnum IO, commenting that "these two pieces of software are some of the crown jewels of our company." He added, "Nobody ever talks about it because it's hard to understand but it makes it possible for us to connect tens of thousands of GPUs."

It's not just about a single chip. Nvidia excels at maximizing the architecture of the entire data center: the way it's built from the ground up with all parts working in unison. As Huang put it, "it's another way of thinking that the computer is the data center or the data center is the computer. It's not the chip. It's the data center and it's never happened like this before, and in this particular environment, your networking operating system, your distributed computing engines, your understanding of the architecture of the networking gear, the switches, and the computing systems, the computing fabric, that entire system is your computer, and that's what you're trying to operate, and so in order to get the best performance, you have to understand full stack, you have to understand data center scale, and that's what accelerated computing is."

Utilization is another major component of Nvidia's competitive edge. As Huang noted, a data center that can do only one thing, even if it can do it incredibly fast, is going to be underutilized. Nvidia's "universal GPU," however, is capable of doing many things (again, back to its massive software libraries), providing for much higher utilization rates.

Lastly, there's the company's data center expertise. On the call, Huang discussed the issues that can arise when building out a data center, noting that for some, a buildout could take up to a year. Nvidia, on the other hand, has managed to perfect the process. Instead of months or a year, he said Nvidia can measure its delivery times in weeks. That's a major selling point for customers constantly looking to remain on the cutting edge of technology, especially as we enter this new age of AI with so much market share now up for grabs.

Bottom line

As we look to the future, it's important to keep in mind that while ChatGPT was an eye-opening moment, or an "iPhone moment" as Huang has put it, we're only at the very beginning. The excitement over ChatGPT isn't so much about what it can already do but more about it being something of a proof of concept of what is possible.
The first-generation iPhone, released 16 years ago as of next month, was nowhere near what we have today. But it showed people what a smartphone could really be. What we have now, to extend the metaphor, is the original first-generation iPhone. If you are going to own, not trade, Nvidia as we plan to, you have to think less about what we have now, as impressive as generative AI applications already are, and more about what this technology will be capable of when we get to the "iPhone 14 versions" of generative AI. That is the really exciting (and somewhat scary) reason to hold on to shares of this AI-enabling juggernaut.

(Jim Cramer's Charitable Trust is long NVDA, MSFT, AMZN, AAPL, GOOGL. See here for a full list of the stocks.)

As a subscriber to the CNBC Investing Club with Jim Cramer, you will receive a trade alert before Jim makes a trade. Jim waits 45 minutes after sending a trade alert before buying or selling a stock in his charitable trust's portfolio. If Jim has talked about a stock on CNBC TV, he waits 72 hours after issuing the trade alert before executing the trade.

THE ABOVE INVESTING CLUB INFORMATION IS SUBJECT TO OUR TERMS AND CONDITIONS AND PRIVACY POLICY, TOGETHER WITH OUR DISCLAIMER. NO FIDUCIARY OBLIGATION OR DUTY EXISTS, OR IS CREATED, BY VIRTUE OF YOUR RECEIPT OF ANY INFORMATION PROVIDED IN CONNECTION WITH THE INVESTING CLUB. NO SPECIFIC OUTCOME OR PROFIT IS GUARANTEED.

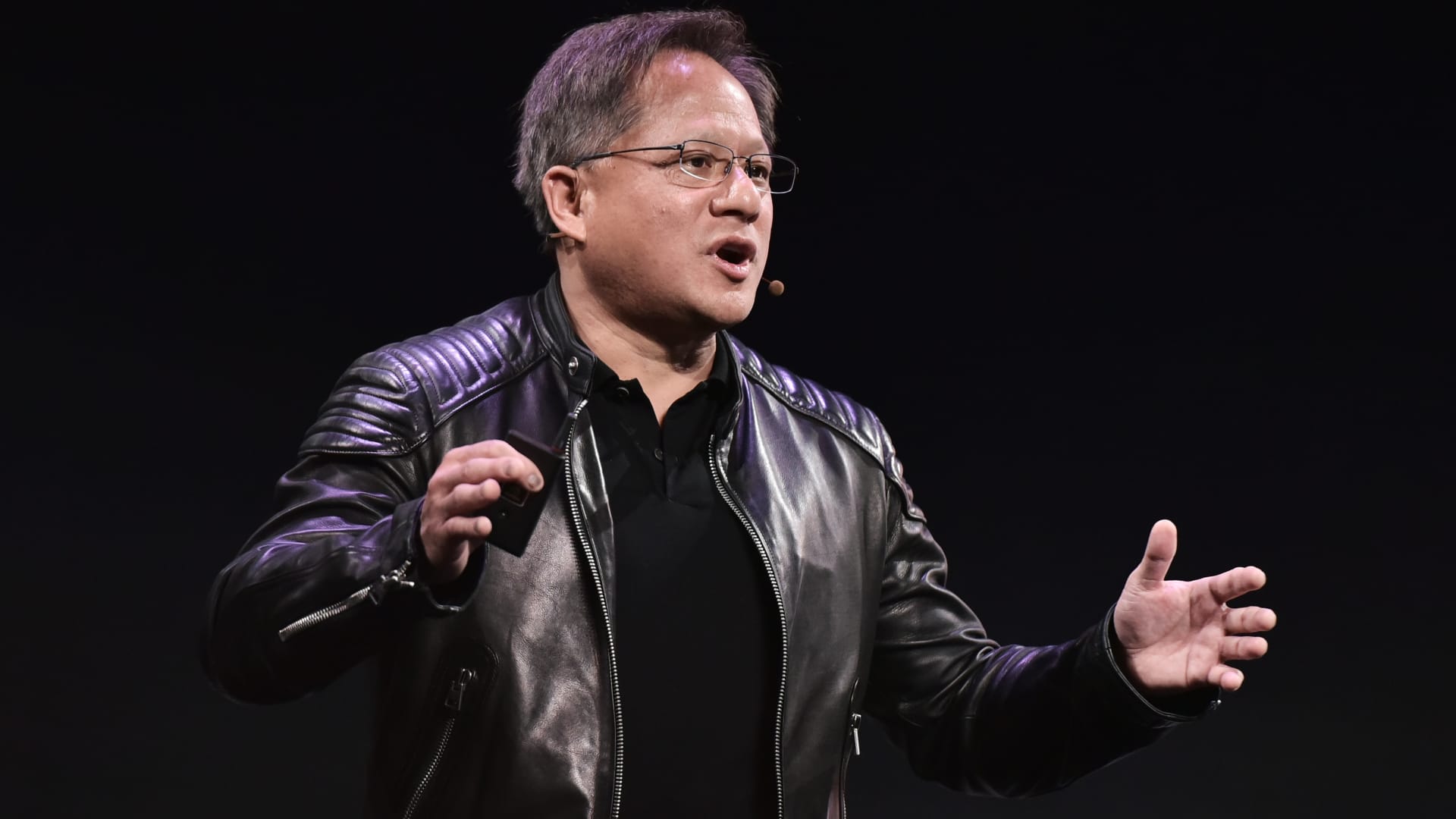

Nvidia CEO Jensen Huang wearing his usual leather jacket.

Getty
